
March 2021 to June 2021

Tauros

Mixed Reality · Remote Robot Control

At a glance

For a student project, we were divided into groups of four to work on the topic “The relation between a robot and a human in a remote working environment”. For this project, we focused on the user experience and on every step the user follows to complete a mission when managing and controlling a remote robot.

Team

Software

Blender 3D

After Effects

Illustrator

Photoshop

Figma

Miro

What I did

UX·UI design

Information architecture

Interaction design

Industrial design

User research

Product design

3D modeling & animation

The problem

Today, workers on offshore platforms are in constant danger, whether they are handling tools or exposed to the risk of explosion. To protect them, we are interested in relocating employees to remote sites from which they can control robots while staying safe.

The Solution

An immersive work experience that helps operators focus on their work. Precise robot control that improves work efficiency. An ergonomic controller designed for remote control.

Steps of the project

01 User Steps

- Autonomous management

- Direct control

02 Robot Management

- Research & Ergonomics

- Dual screen

- Map management

- Dashboard management

- Emergency case

03 Robot Control

- Research & Ergonomics

- Tests & Experiments

- Immersive bubble

- New robot

- Inverse kinematics

- Controller

Steps of control


Autonomous Management & Robot Control

We decided to split robot management into two distinct parts. The first takes place on a computer. In the second, the user enters an immersive bubble to control the robot with precision.

Autonomous · Management

During management, the user is kept informed and has access to the robots' statistics.

He can also give the robots tasks that they perform autonomously.

Management on computer interface

Robot Control & Emergency

When a robot cannot perform an action by itself, or in an emergency, the user enters an immersive bubble. There he can take control of the robot and complete the mission manually.

Robot control & emergency in the immersive bubble


Analysis · Autonomous Management & Robot Control

To move forward efficiently, we defined the key points of the project. This later allowed us to focus on the most important elements rather than spending time on details that would be unnecessary at this stage of development.

Robot Management

Research & Ergonomics

Since the user will mainly be in front of a computer to manage the robots, he must sit in a comfortable position that allows him to work for several hours without physical strain. He will have a dual screen at his disposal, giving him a quick view of the elements essential to his work.


Dual screen

A dual screen is essential in our use case. The user must handle live information on a map as well as the notifications and information related to the robots' actions.

Comfortable position & Quick Look Dual Screen


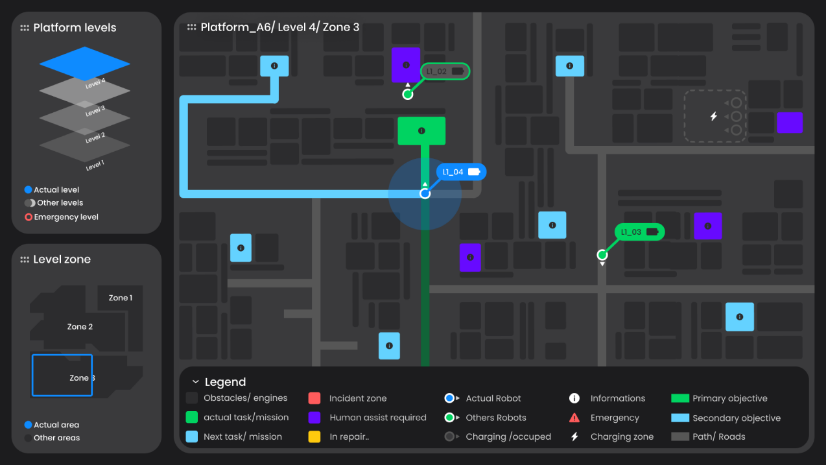

Left Screen · Map Management

The left screen shows the map and the levels of an offshore platform. It has three distinct parts:

The map is the main part. Here we can follow each robot's movements live, along with the mission it is carrying out and its next mission. A legend makes the elements displayed on the screen easier to understand.

The level selector. With a single click we can access the other levels and also see which level is currently displayed.

The zone indicator. Some levels are too large to be displayed clearly in full. When the user zooms in on an area, the indicator shows its location at a glance.

Left Screen · Map Management


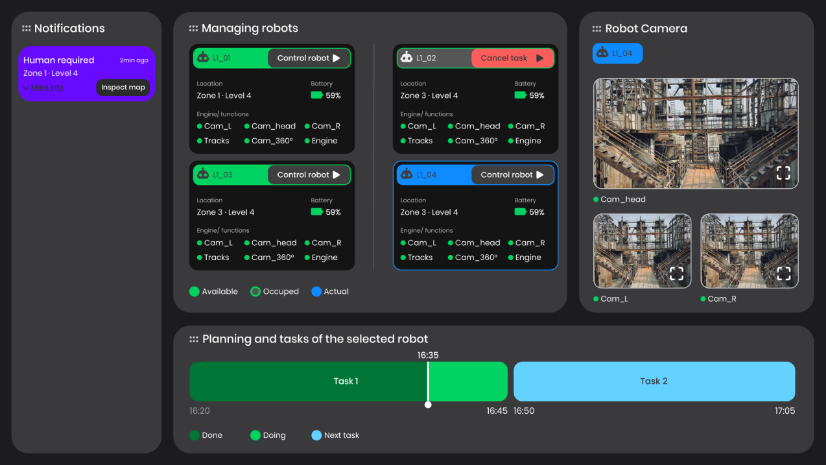

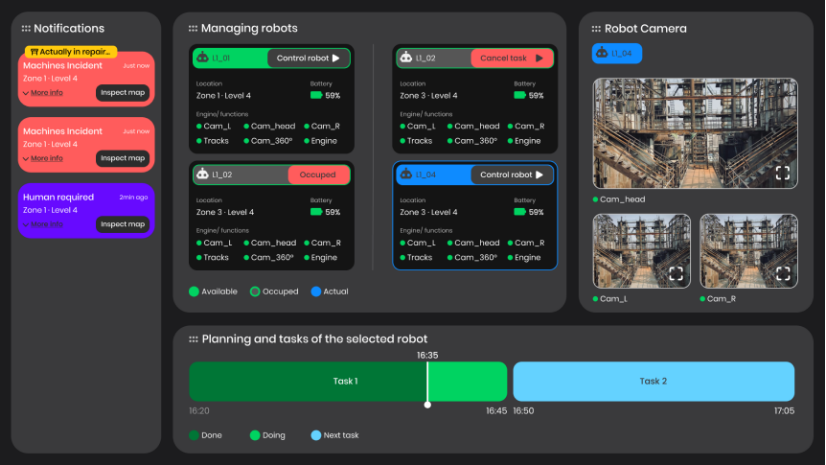

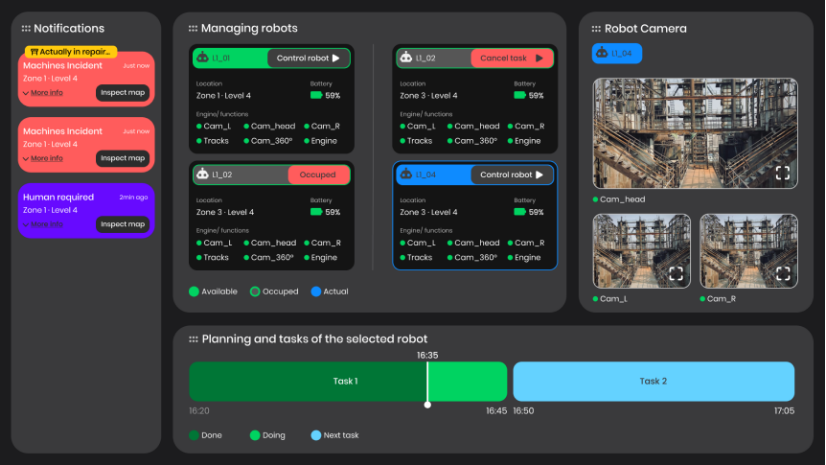

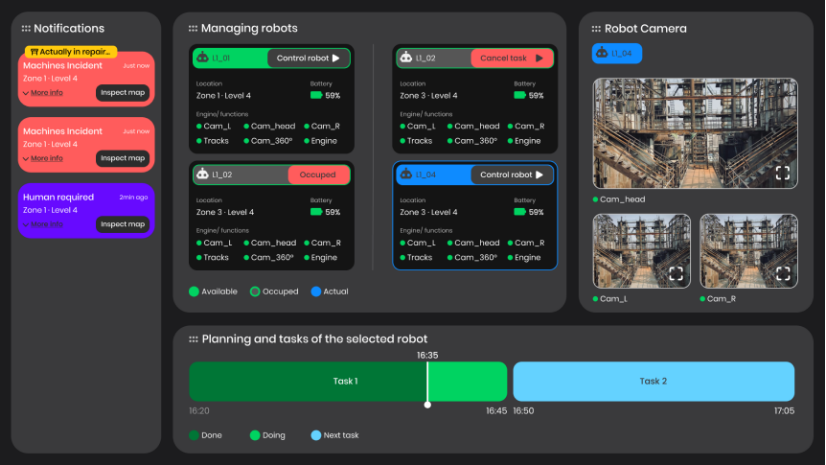

Right Screen · Dashboard Management

The right screen receives notifications and important information about the robots.

Notifications. Here the user receives support requests from robots that encounter a problem or need human assistance.

Robot management. This part shows the status of each robot: whether it is performing a task, whether all its components are working, and its battery level. From here the user can also cancel a task or take control of the robot.

When he selects a robot, he can see its schedule and an estimate of the time it needs to complete its autonomous mission. The robot's cameras let him watch what it is doing and check that it is not making mistakes during its autonomous steps.

Right Screen · Dashboard Management


Analysis · Dual Screen

User testing during the second submission phase of the project confirmed that the direction we had taken was convincing. This allowed us to move forward and dive into the use case where the user faces an emergency.


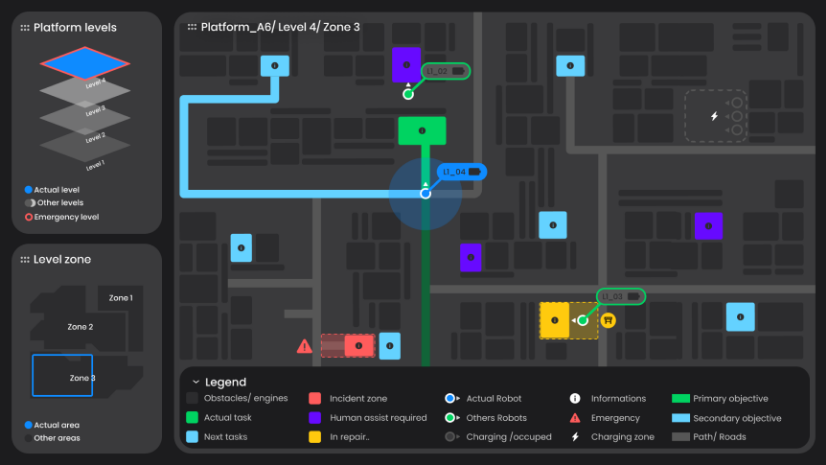

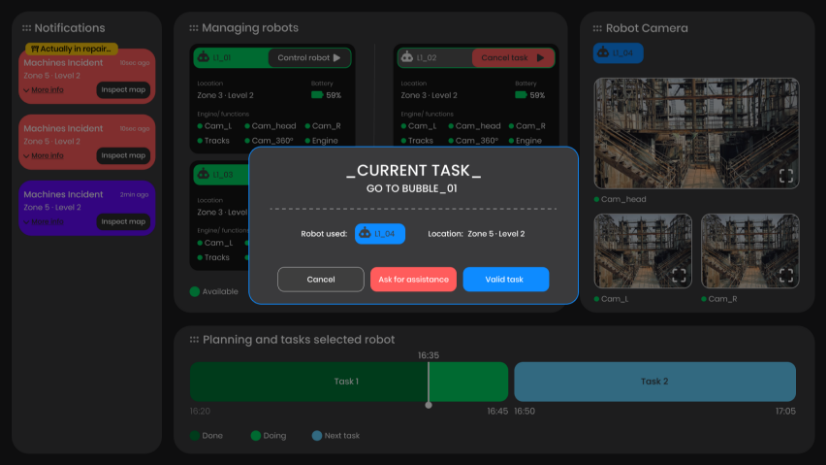

Emergency Case

In an emergency, the user must be guided through a stressful situation while still being able to act quickly and efficiently. To this end, we designed an intuitive and efficient user path. Important information is highlighted, and the user receives instructions on the steps to follow.


Left Screen · Problem Level and Position Indicator

At a glance, the user sees where the problem is. When he clicks the corresponding notification in the notification area of the right screen, the map updates to display the affected area. It also shows the nearest robot that can be controlled, so he can act as quickly as possible.

Left Screen · Problem Level Indicator
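The “nearest robot that can be controlled” suggestion can be sketched as a filter-and-minimum search. This is a minimal sketch. It assumes a hypothetical `Robot` record with a level number, 2D map coordinates and an availability flag; none of these names come from the project. Straight-line distance is also an assumption. On a real platform, walking distance around walls and stairs would matter more.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Robot:
    # Hypothetical fields, for illustration only.
    robot_id: str
    level: int          # platform level the robot is on
    x: float            # map coordinates on that level
    y: float
    controllable: bool  # False if busy, offline or already taken over


def nearest_controllable_robot(robots: list[Robot], level: int,
                               x: float, y: float) -> Optional[Robot]:
    """Closest controllable robot on the incident's level, or None."""
    candidates = [r for r in robots if r.controllable and r.level == level]
    if not candidates:
        return None
    # Straight-line distance on the map (a simplifying assumption).
    return min(candidates, key=lambda r: math.hypot(r.x - x, r.y - y))


fleet = [
    Robot("R1", level=2, x=10.0, y=4.0, controllable=True),
    Robot("R2", level=2, x=3.0, y=1.0, controllable=False),
    Robot("R3", level=2, x=5.0, y=5.0, controllable=True),
    Robot("R4", level=1, x=4.0, y=2.0, controllable=True),
]
print(nearest_controllable_robot(fleet, level=2, x=4.0, y=2.0).robot_id)  # R3
```

R2 is closer but unavailable, and R4 sits on another level, so the suggestion falls on R3.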


Right Screen · Notification & Going to the Control Bubble

The right screen receives notifications about emergencies that must be handled first. The user can see whether a colleague is already solving one of the current problems, which prevents several people from working on the same emergency. An “Inspect map” button quickly shows where the emergency is located, so the user knows the affected area right away. Once the user has taken control of a robot, a notification tells him which immersive bubble to go to. The screen stays locked until he clicks one of the notification's buttons, to avoid any risk of error.

Right Screen · Problem notification

Right Screen · Going to the control bubble
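The rule that two operators should not work on the same emergency amounts to a claim step on a shared list. Below is a minimal in-memory sketch. `Emergency`, `EmergencyBoard`, `claim` and the numeric priority are hypothetical names, not part of the project. In a real multi-operator setup, the board would live on a shared server and `claim` would have to be atomic (for example, a compare-and-set in a database). This single-process version does not attempt that.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Emergency:
    emergency_id: str
    priority: int                     # lower number = handle first (assumption)
    handled_by: Optional[str] = None  # operator currently solving it


class EmergencyBoard:
    """Hypothetical shared list of emergencies visible to every operator."""

    def __init__(self) -> None:
        self._emergencies: dict[str, Emergency] = {}

    def report(self, emergency: Emergency) -> None:
        self._emergencies[emergency.emergency_id] = emergency

    def claim(self, emergency_id: str, operator: str) -> bool:
        """Take the emergency unless a colleague is already solving it."""
        emergency = self._emergencies[emergency_id]
        if emergency.handled_by not in (None, operator):
            return False
        emergency.handled_by = operator
        return True

    def open_for(self, operator: str) -> list[Emergency]:
        """Emergencies this operator may still take, most urgent first."""
        available = [e for e in self._emergencies.values()
                     if e.handled_by in (None, operator)]
        return sorted(available, key=lambda e: e.priority)


board = EmergencyBoard()
board.report(Emergency("leak-level-2", priority=1))
board.report(Emergency("valve-level-3", priority=2))
print(board.claim("leak-level-2", "alice"))  # True
print(board.claim("leak-level-2", "bob"))    # False: alice already has it
print([e.emergency_id for e in board.open_for("bob")])  # ['valve-level-3']
```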


Analysis · Emergency Case

Working on a use case where the user is under stress was very interesting for us. We had to put ourselves in these people's shoes to understand what goes through their minds in this kind of situation. Everyone reacts differently to stress. Even so, we found it very important not to display alarms or other elements that could add to the user's stress. We therefore concluded that intuitive assistance was the best approach, and we are satisfied with the resulting user path.

Robot Control


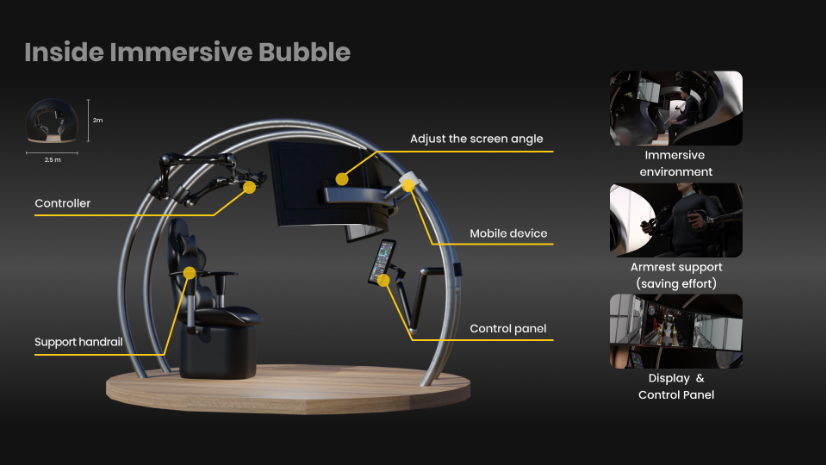

Immersive bubble · Overview

Research & Ergonomy

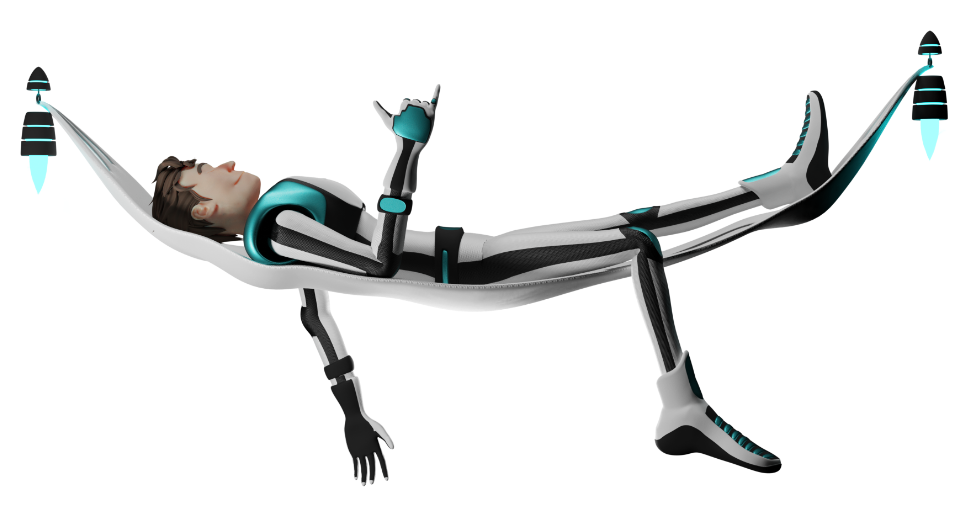

In order to propose an immersive bubble which will be used to control the robot without being disturbed by the external environment but also to act quickly and to have the least possible movement to make to take the control of the robot and then to operate as quickly as possible on the places of emergencies

Following a request from our teachers, the use of a virtual reality headset will not be proposed in this proposal, under the justification that a headset is not comfortable enough for a purpose that could last a certain period of time


Surgical example

To operate accurately from a remote location, we drew inspiration from surgeons who learn to operate on small areas by watching screens rather than their own hands. The screens magnify the important areas and offer much better precision than the naked eye.

Surgical example · Link reference

Exoskeleton arm

Exoskeletons are increasingly common in industry. Our interest in this technology lies in the interaction it enables between the user and the robot. One example is force feedback, which helps avoid breaking fragile elements during the precise manipulations the robot performs by following the user's movements.

Exoskeleton arm · Link reference


Analysis · Research & Ergonomics

This research is the strong point of our presentation: it justifies the choices we made while building our technical solution. Combining these two technologies will let the user act remotely with a particularly high level of precision.


Tests & Experiments

Before producing the 3D models and other elements of our proposal, we challenged our ideas. Using all the accessories available in the office, we built a prototype of the device we had imagined. This experiment revealed the constraints of the solution. By taking the time to understand the problems the user would encounter, we were able to anticipate them and design relevant solutions.

Test & Experimentation


Analysis · Tests & Experiments

Taking the time to test and challenge our ideas is essential before going into production, because we often fail to anticipate problems that only appear later. This step avoids wasted time and backtracking. In addition, putting ourselves in the user's place makes it easier to understand his real needs. We therefore evolved our ideas so that they closely match our users' real needs.


Immersive Bubble

Once our experiments were complete, we designed an immersive bubble (pictured below without its enclosure) that addresses all the problems we encountered.

It has three curved screens that display the robot's camera feeds. The screens are movable, so they can be aligned and adjusted to the height of anyone who uses the bubble. The outer screens can either widen the field of view to 180° or show the movements of the robot's arms in detail.

A control pad lets the user switch from one robot to another with a single click, without going back to the computer.

The controllers are mounted on exoskeleton arms that allow precise movements and can also hold a fixed position when needed.

The seat and the screens are adjustable. The user can therefore sit in many different positions and use the controllers in many combinations. Adjustable elbow rests support the user's arms and improve precision during certain movements.

Inside Immersive Bubble


Controller

The controllers are the most complex part of our project, and we made sure they are intuitive to handle. They have two modes, each with its own purpose. The first gives direct control of the robot's movements and of its arms. The second is used to manage the robot. It gives access to more complex manipulations and to areas that are difficult to reach, even for humans.

We drew inspiration from virtual reality controllers. They not only translate hand movements but, through their buttons, also offer a much wider range of functions than a standard game controller or a keyboard and mouse.

The controller instructions presented below can be displayed on the pad, so the user can remember what each button does.


Mode 1 · Robot Control

As its name suggests, the first mode is used to control the robot. The figure below shows the function of each button.

For example, the joysticks move the robot, while the user's arm movements control the robot's arms. To move the arms, the user must keep his fingers pressed on the back of the joysticks. This does not get in his way during manipulation and helps him avoid mistakes.
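Holding the back of the joysticks to move the arms acts like an enable switch: hand motion reaches the robot only while the grip is held, so releasing it freezes the arms. The project does not describe its implementation. One common approach, assumed here, is a relative "clutch-style" mapping: each controller update adds the hand's displacement to the arm target, but only while the grip is pressed. `Vec3`, `arm_target` and `follow_hand` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)


def arm_target(target: Vec3, hand_delta: Vec3, grip_held: bool) -> Vec3:
    """New arm target after one controller update."""
    if not grip_held:
        return target            # grip released: the arm stays where it is
    return target + hand_delta   # grip held: the arm follows the hand


def follow_hand(start: Vec3, updates: list[tuple[Vec3, bool]]) -> Vec3:
    """Apply a sequence of (hand displacement, grip held?) updates."""
    target = start
    for hand_delta, grip_held in updates:
        target = arm_target(target, hand_delta, grip_held)
    return target


updates = [
    (Vec3(0.10, 0.0, 0.0), True),   # moves the arm
    (Vec3(0.50, 0.5, 0.5), False),  # hand repositioned, arm ignores it
    (Vec3(0.0, 0.05, 0.0), True),   # moves the arm again
]
print(follow_hand(Vec3(0.0, 0.0, 0.0), updates))  # Vec3(x=0.1, y=0.05, z=0.0)
```

A side benefit of the relative mapping is that the user can release the grip, bring his hand back to a comfortable position, and carry on without the robot copying that repositioning.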

The force feedback on the triggers lets the user feel the force the robot applies during its manipulations. An increasingly strong vibration is added on top of it. It warns the user that he is applying too much force and must release the pressure to avoid breaking what he is handling.
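The escalating vibration can be expressed as a mapping from the force the clamp applies to a vibration level. A minimal sketch follows. It assumes a per-object safe force limit and a linear ramp that starts at 75% of that limit. The threshold, the linear shape and the function name `vibration_intensity` are illustrative assumptions, not values from the project.

```python
def vibration_intensity(applied_force: float, safe_limit: float,
                        warn_ratio: float = 0.75) -> float:
    """Vibration level in [0, 1] for the force the clamp is applying.

    No vibration below warn_ratio * safe_limit. Above it the vibration
    grows linearly and saturates at 1.0 once safe_limit is reached.
    """
    if safe_limit <= 0:
        raise ValueError("safe_limit must be positive")
    if not 0.0 <= warn_ratio < 1.0:
        raise ValueError("warn_ratio must be in [0, 1)")
    ramp_start = warn_ratio * safe_limit
    if applied_force <= ramp_start:
        return 0.0
    return min(1.0, (applied_force - ramp_start) / (safe_limit - ramp_start))


# A fragile part with an assumed 40 N limit: the ramp starts at 30 N.
for force in (20.0, 35.0, 40.0, 55.0):
    print(force, vibration_intensity(force, safe_limit=40.0))
```

A linear ramp keeps the warning predictable. A curve that steepens near the limit would be another plausible choice if users need a sharper cue.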

A single click on the switch-mode button switches to Mode 2; keeping it pressed switches to Driving mode. We added Driving mode because it locks the robot's arms as close as possible to its body. The robot can then move around the platform without colliding with its environment.

Controller · Mode 1
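The switch-mode button's behaviour is a small state machine: a single click toggles between Mode 1 and Mode 2, and a long press enters Driving mode with the arms tucked in. This sketch makes three assumptions the source does not specify. It uses a 0.6 s long-press threshold. It decides the next mode when the button is released rather than while it is still held. And a click in Driving mode returns to Mode 1. `Mode`, `on_switch_button` and `arms_locked` are hypothetical names.

```python
from enum import Enum


class Mode(Enum):
    ROBOT_CONTROL = "Mode 1"     # drive the robot and move its arms
    ROBOT_MANAGEMENT = "Mode 2"  # adjust arm angles and camera display
    DRIVING = "Driving"          # arms locked close to the body


LONG_PRESS_S = 0.6  # assumed long-press threshold, in seconds


def on_switch_button(mode: Mode, press_duration_s: float) -> Mode:
    """Next mode when the switch-mode button is released."""
    if press_duration_s >= LONG_PRESS_S:
        return Mode.DRIVING
    if mode is Mode.ROBOT_CONTROL:
        return Mode.ROBOT_MANAGEMENT
    # From Mode 2 (or, by assumption, from Driving) a click returns to Mode 1.
    return Mode.ROBOT_CONTROL


def arms_locked(mode: Mode) -> bool:
    """Arms stay tucked against the body while driving."""
    return mode is Mode.DRIVING


mode = Mode.ROBOT_CONTROL
for press in (0.2, 0.2, 1.0, 0.2):
    mode = on_switch_button(mode, press)
    print(mode.value, "arms locked" if arms_locked(mode) else "arms free")
```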


Mode 2 · Robot Management

When the user switches modes, the functions of the buttons change accordingly.

This second mode is used to adjust the position and rotation angle of the arms, as well as the camera display.

This gives the user precise control over the viewing angle and the use of the arms. He can obtain viewpoints that are difficult for a human to reach, and use the arms to grab objects that are otherwise hard to access.

Two further features, one related to inverse kinematics and the “Lock/Unlock rotation angle arm” feature, are presented in more detail below.

Controller · Mode 2


Analysis · Immersive Bubble & Controller

Designing the overall use of the immersive bubble and its controllers was a real challenge. Our tests simulating robot control suggested a large number of functions. We kept only the essential ones, to make the controllers as simple as possible. By reusing intuitive interactions found in VR games, we kept the controllers from being overwhelming or too complicated on first use.


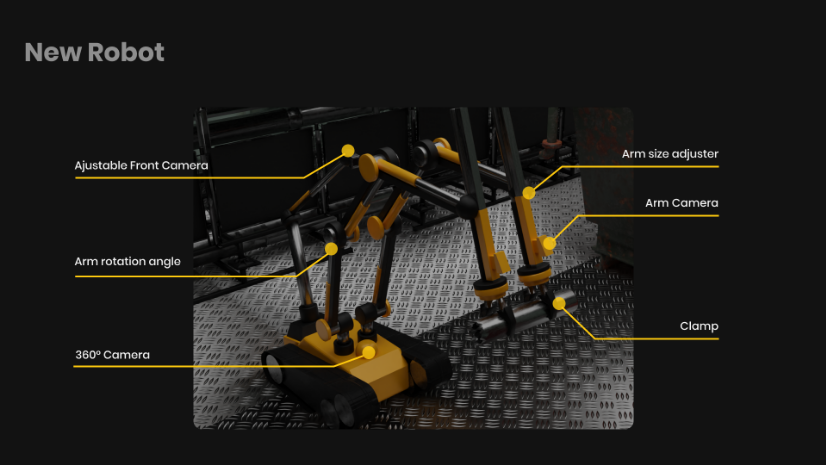

New Robot

Alongside the controllers and the immersive bubble, we designed a robot capable of performing simple tasks autonomously through artificial intelligence. It can also recharge itself at a dedicated station.

A central camera mounted on an arm acts as its head and main source of vision. To complete this view and improve visibility of its surroundings, we added a 360° camera. It helps the user, or the robot itself, avoid colliding with obstacles that would otherwise go unseen.

It also has two arms whose rotation angle and length are both adjustable. This lets it reach objects around it, whether they are above or below its center of gravity, including objects that are hard to access even for a human.

Clamps at the end of its two arms grip the objects or handles it encounters during its missions. These clamps are linked to the force feedback of the controllers, so the user gets direct feedback on the force he applies when using them.

New Robot Description


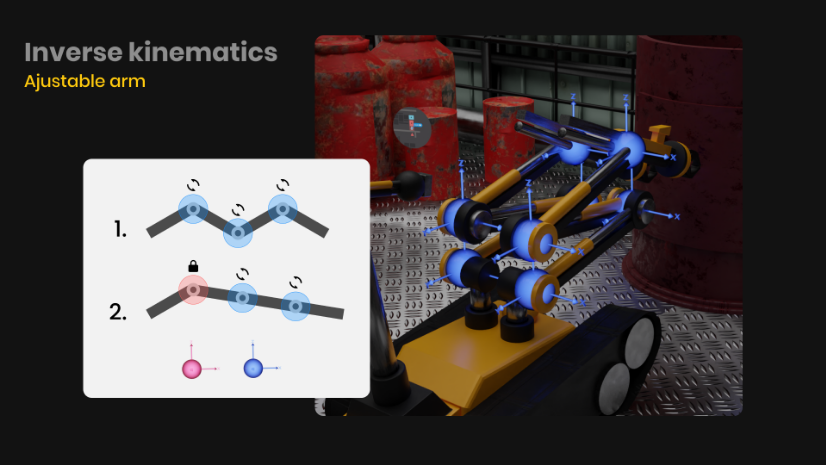

Inverse Kinematics

Inverse kinematics makes sense for this project.

As the two pictures below show, inverse kinematics lets the user control the robot's arms naturally: when one part of the robot is moved, the rest of its joints follow at the same time.

This is convenient in the main use case, when the user controls the arms as well as the hands. It also helps when he adjusts the robot's rotation angles, as explained in Mode 2.

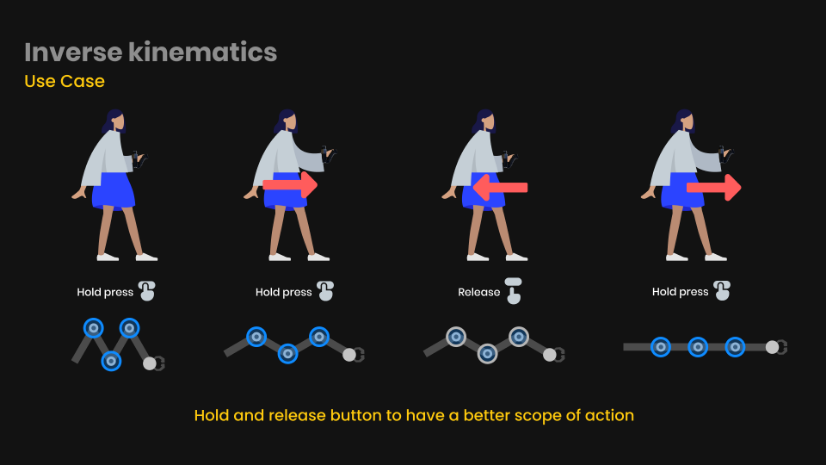

Two examples are presented below: the adjustment of the arms, and a use case that shows an interesting capability of the arm.

With arm adjustment, the user can control the angle of each arm joint and lock its position, giving access to even more complex areas.

The use case shows that the user is not limited by the length of his own arms. He can extend the robot's arm to reach farther, which is very practical in some situations, as shown in the video presentation.

Adjustable arm · Inverse Kinematics

Use case · Inverse Kinematics
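To make “move one part and the other joints follow” concrete, here is the textbook analytic inverse-kinematics solution for a planar arm with two segments. It is only a sketch. The project does not say which solver it uses. The robot's real arms move in 3D with more joints, where a numerical solver would be more typical. The function names and the lengths in metres are illustrative assumptions. The example also shows why an extendable segment helps: a point out of reach with 0.8 m + 0.8 m segments becomes reachable once the forearm is extended to 1.2 m.

```python
import math
from typing import Optional


def two_link_ik(x: float, y: float, upper: float, fore: float,
                mirror: bool = False) -> Optional[tuple[float, float]]:
    """Shoulder and elbow angles (radians) placing the hand at (x, y).

    The shoulder sits at the origin; `upper` and `fore` are segment
    lengths. Returns None when (x, y) is out of reach. Most reachable
    points have two mirror-image solutions; `mirror` picks the other one.
    """
    dist_sq = x * x + y * y
    # Law of cosines: elbow angle from the shoulder-to-hand distance.
    cos_elbow = (dist_sq - upper**2 - fore**2) / (2 * upper * fore)
    if not -1.0 <= cos_elbow <= 1.0:
        return None
    elbow = math.acos(cos_elbow)
    if mirror:
        elbow = -elbow
    shoulder = math.atan2(y, x) - math.atan2(fore * math.sin(elbow),
                                             upper + fore * math.cos(elbow))
    return shoulder, elbow


def hand_position(shoulder: float, elbow: float,
                  upper: float, fore: float) -> tuple[float, float]:
    """Forward kinematics, used to check the IK result."""
    return (upper * math.cos(shoulder) + fore * math.cos(shoulder + elbow),
            upper * math.sin(shoulder) + fore * math.sin(shoulder + elbow))


target = (1.8, 0.6)
print(two_link_ik(*target, upper=0.8, fore=0.8))   # None: out of reach
angles = two_link_ik(*target, upper=0.8, fore=1.2)  # forearm extended
print(hand_position(*angles, upper=0.8, fore=1.2))  # ≈ (1.8, 0.6)
```

The user only specifies where the hand should go, and the solver derives the joint angles. Locking a joint, as in the arm adjustment feature, would amount to removing that angle from the solver's unknowns.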


Analysis · Inverse Kinematics

Once again, designing the robot's functions was a real challenge. We could not go on site to analyze the situations the robot would encounter during its missions. Instead, we did research and imagined the missions it would have to accomplish. From this, we established what the robot should be able to do.

We wanted it to move like a human, with a head and arms, so that controlling it feels intuitive. We also wanted it to reach places and perform movements that would be almost impossible for a human. Combining these two goals gave birth to this new robot, which will be very useful for remote manipulation.

Solution


Video Presentation

To demonstrate the relevance of our robot, we made a video presenting a use case in which the user receives an emergency notification, takes control of a robot and solves the problem manually. Each step of the mission is shown, demonstrating how the robot's functions support the task.


Evaluation

After researching and developing our solution for the topic “The relation between a robot and a human in a remote working environment”, we contacted professionals in this field. Their feedback was very positive. This encourages us to believe that our solution could let employees in risky areas stop putting their lives in danger by controlling robots remotely instead. However, this solution remains a concept, and we have no results from real-world use.


Lessons learned

We are happy to have worked on this case. However, it has some limitations that we cannot ignore.

We are aware of the consequences this kind of solution could have, such as job losses among people who work in risky areas if robots replace them. That is not our goal. Our vision for this project is to design new interactive solutions that protect these workers from danger while allowing them to do their work remotely.

We learned a lot throughout the project. Discovering this type of user took us out of our comfort zone. The daily life of people who risk their lives at work made us think. If this project could help give them a daily life in which they are no longer afraid to go to work, that would be a great success.

This project was very instructive and will be useful in our future projects


© 2021 Robin Exbrayat · All rights reserved